Objective To test the ability of ChatGPT 4.0 to provide accurate patient- and physician-level information about abdominal aortic aneurysms (AAA) and to assess the alignment of its responses with the Society for Vascular Surgery (SVS) guidelines for the care of patients with AAA.

Methods Fifteen questions were developed to test the ability of ChatGPT 4.0 to provide patient-level information about AAA. A subset of the current SVS clinical practice guidelines (CPGs) on AAA (N=28) was chosen and converted into 37 questions to query ChatGPT 4.0. Four additional questions were specifically designed to query the annual risk of rupture for AAA at varying diameters. A single response to each question was recorded from the generative AI (GAI) bot, without follow-up prompting. Ten board-certified vascular surgeons and one fellow independently graded the accuracy of responses using a 5-point Likert scale where 1=very poor, 2=poor, 3=fair, 4=good, 5=excellent.

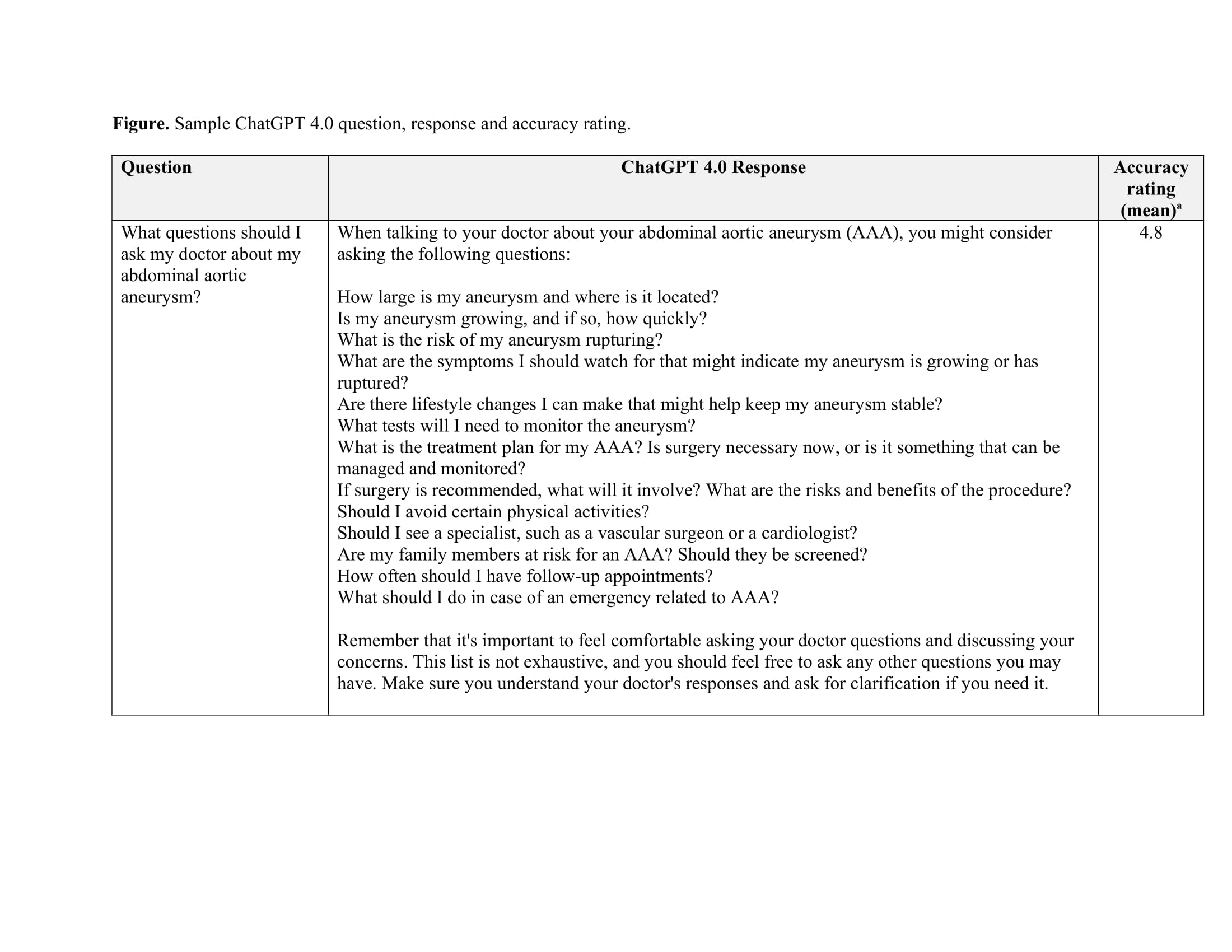

Results The mean of the means accuracy rating across all 15 patient-level questions was 4.4 (range 1.8-4.9) (Figure). Only one response was rated very poor to poor for accuracy. ChatGPT 4.0 demonstrated good alignment with SVS practice guidelines (mean of the means 4.3, range 2.6-4.9). The accuracy of responses was consistent across guideline categories: screening and surveillance (4.3), indications for surgery (4.5), pre-operative risk assessment (4.7), perioperative coronary revascularization (4.1), and perioperative management (4.2). ChatGPT 4.0 accurately cited the SVS Vascular Quality Initiative AAA mortality risk predictor but failed to list and enumerate practice guidelines when directly queried. The GAI-bot demonstrated only fair performance in answering questions on the annual risk of AAA rupture (mean of the means 3.5, range 3.1-4.1).
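One plausible reading of the "mean of the means" reported here is that each question's ratings are first averaged across graders, and those per-question means are then averaged, with the range taken over the per-question means. The Python sketch below illustrates that calculation under this assumption. The function name `mean_of_means` is hypothetical, and the ratings are invented placeholders, not data from the study.

```python
from statistics import mean

def mean_of_means(ratings_by_question):
    """Summarize Likert ratings (1-5) given as one list of grader scores per question.

    Returns (overall mean of per-question means, lowest per-question mean,
    highest per-question mean). Assumes every question has at least one rating.
    """
    per_question = [mean(scores) for scores in ratings_by_question]
    return mean(per_question), min(per_question), max(per_question)

# Invented placeholder ratings: three questions, four graders each.
# These are NOT the study's data.
example_ratings = [
    [5, 4, 5, 4],
    [2, 1, 2, 3],
    [4, 4, 5, 5],
]

overall, lowest, highest = mean_of_means(example_ratings)
print(f"mean of means: {overall:.1f} (range {lowest:.1f}-{highest:.1f})")
```

When every question is graded by the same number of raters, as with the eleven graders described in Methods, this value equals the simple mean of all individual ratings. The two can differ if some ratings are missing.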

Conclusion ChatGPT 4.0 provided accurate responses to a variety of patient-level questions regarding AAA. Responses were well aligned with current SVS practice guidelines, except for inaccuracies in quoting the risk of rupture for aneurysms of varying diameters. The emergence of generative AI bots presents an opportunity to study their potential applications in patient education and to determine their ability to augment the knowledge base of vascular surgeons.